The Grounded Turing Test

Intelligence is multi-faceted.

Intelligence is a multi-faceted concept that seems to evade definition. We would go as far as to say it is an error even to attempt to define the term intelligence, saying instead, we know it when we see it. Over the past 50 years, there have been many attempts to define what it means to be intelligent, and many computational systems that demonstrate various forms of intelligence. The arguments around what it means to really be intelligent are nothing new: Rod's paper, Elephants Don't Play Chess, was in part a reaction to the fact that chess engines with alpha/beta pruning fail to generate long-horizon robotic behavior in the physical world. One reason people are excited about LLMs is that they demonstrate many facets of intelligence at once: they have some ability to play chess, along with writing blog posts and serving as an in-pocket tour guide. And technologies like π0.5 show promise in expanding these capabilities to the physical world. Our aim in defining the Grounded Turing Test was to be specific about certain capabilities that LLMs don't (yet) have.

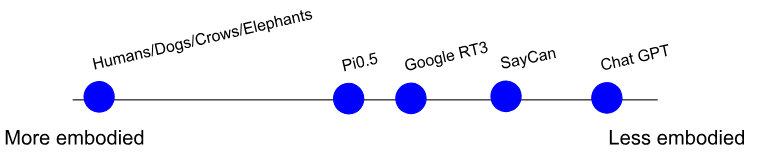

From that perspective, there is a facet that is missing from ChatGPT: embodiment. Crows, elephants, and chimpanzees have behaviors associated with this facet of intelligence, without having spoken natural language at all. From a capabilities perspective, we mean processing high-dimensional, high-framerate sensor input and producing high-dimensional, high-frequency actuator output to produce long-horizon goal-directed behavior in the physical world. We make two claims: 1) embodiment is an important facet of intelligent behavior, and 2) LLMs like ChatGPT lack embodiment. More precisely, embodiment is a spectrum, and LLMs are far on one side of the spectrum, compared to humans, dogs, and crows, and because of this, they fail at a number of behaviors today.

Our book chapter enumerates the ways an embodied intelligent robot can use language, and then describes the research problems inherent to each. To pass the Grounded Turing test, a system must support all of these capabilities rather than any single one. It must pass in the physical world, at the very least, through a mobile robot equipped with a camera, text input, text output, and manipulators.

Our work falls into a family of approaches that try to extend the Turing Test to different embodiments. Unlike most previous approaches, we try to be specific about the behaviors and types of language use that are important. Srivastava et al. (2023), in contrast, propose a text-based, non-embodied extension to the Turing test, called the Beyond the Imitation Game Benchmark (BIG-bench), designed for the age of large language models. BIG-bench consists of 204 text-based tasks from a diverse array of domains, ranging from linguistics to biology to common-sense reasoning. Current LLMs perform poorly on these tasks in an absolute sense; although performance improves with model size, it remains far below the level of human raters (Mirzadeh et al., 2024). But even if (when!) models pass these benchmarks, we argue that because the resulting model will not be embedded in high-dimensional space-time with goal-based behavior, they will not be able to demonstrate many specific behaviors associated with embodiment.

Rather than exploring all the types of language we enumerate, we'll conclude with a specific example: "Pick up the red block that's on the table." In one sense, this is the simplest and most easily solved task: surely a large behavior model can pick and place a clearly segmented primary-colored object; π0.5 is already performing much more complex tasks like making beds! But when you consider the embodied version, where the red block and the table aren't already in the field of view, it's much more challenging. Consider picking up the red block that Stefie's three-year-old lost and putting it away before her husband steps on it. (Not that this happened recently or anything.) Or "Pick up the kitten." To perform this kind of task, an embodied agent needs to have an awareness of space and time, to know where to search, to reason about what has and hasn't been searched, as well as long-horizon behaviors, such as opening a closet door to see if the cat is trapped inside. Rob Brooks gave a related example: an AI that "seems as intelligent, as attentive, and as faithful as a dog." One of the most salient aspects of a dog's attentiveness is its ability and drive to find their person, wherever they may be.

Finally, we make this claim at this moment. We underestimated the power and success of LLMs, so we are reluctant to say this won’t be solved soon. But - we are trained as researchers to identify unsolved problems and then solve them! So what are the most important problems in your field? And why aren't you working on them?